
Introduction

Let me start by asking you a question. When you are driving your car, what is one of the key indicators that something is wrong, that it may need a tune-up, or worse still, that it is about to break down? It is the sound the car makes. There is a reason why hearing is our critical danger and warning sense. It is always on (even when we are asleep) and it works in a 360-degree sphere, so we can hear things we cannot see. Our brain also recognises patterns in what we hear very effectively, so we notice a change in sound that may indicate a problem.

Yet despite how effective our sense of hearing is, I have been surprised on many occasions by the number of training simulators, both military and industrial, that overlook the importance of sound. I have spoken to users of some of the most advanced (and expensive) military flight sims and been told over and over that the sound was poor quality, not very useful and not designed in a way that aids the user.

Virtual Reality

VR is rapidly proving to be an effective format for training vehicle and machinery users. Being able to place a user INSIDE a virtual vehicle and have them operate it in a virtual world allows for realistic training, and the virtual nature of the space ensures safety. The audio capabilities of VR surpass almost any other media format, and we are still discovering just how effective VR audio is at providing useful feedback and enhancing the realism of VR environments. We can completely surround the user in audio that responds to their actions and can be used to indicate events in the virtual world.

Moreover, well-implemented VR audio can add value to a trainer far in excess of its cost. Need to simulate a critical machinery failure? This can be done almost entirely with sound. It will indicate an issue the user cannot even see and, in many cases, does not require expensive graphic representation.
So, let's have a look at a couple of scenarios for simulators and how well-designed audio could support them.

Industrial

For an industrial training simulator designed to train heavy machinery operation, audio feedback confirms nominal operation. Essentially, if everything sounds good, then everything is likely working correctly. For equipment used for digging, audio feedback can inform the user of the types of materials being processed. A drill of any kind, from your cordless home drill right up to a machine rig, will alter its sound as it operates. Strain or stress will alter the sound it makes, and cutting through harder materials will sound different. In this way you can simulate not only operational issues, but potentially reflect exactly what is being drilled based on the sound it makes.

For training in critical failure scenarios, audio is probably more important than other aspects of the sim. Engine or equipment issues, heavy vehicle performance and emergency situations are almost always indicated through the sounds you hear before any other indicator. A hydro station turbine performs under constant stress, and shutdown of this type of equipment is time-consuming and costly. Capturing the sound of this equipment when it is working optimally allows immediate and easy reference at any time. Is your equipment working properly? Listen to how it currently sounds, then compare it to the reference recording for optimal operation.

The “all around us” nature of sound should never be underestimated. Operating many large industrial machines has the constant inherent issue that the operator cannot see much of their environment or even most of the machine they are using. But if something goes wrong behind them they will often hear it, even if they do not see it. Many pieces of industrial equipment are huge and very noisy; as a consequence, the operators must use hearing protection.
But even with hearing protection, the vibrations of potential issues can carry through to the operator. Many training simulators cannot provide haptic feedback (vibrations and movement), but again sound can provide this vibration information using low frequency sound output.

Military

When working on a VR infantry training simulator I was tasked with providing the sounds that matched the firearms being used by the troops using the simulator. So, I was told the priority was for the gun in the hand of the user to sound realistic. This was a good start to creating a useful trainer. However, when I asked about the sounds to use for the virtual AI opponents I was told just to use anything; the sound of an AK47 would be fine.

I found this strange. My time in basic training many years ago reinforced to me that one of the most important steps in any engagement was to ascertain the biggest current threat, i.e., who was trying to kill you and how they were doing it. If you talk to any combat soldier you will find that many of them learn very quickly to recognise the sounds of various enemy weapons. This can mean the difference between life and death in the seconds you have to respond in a combat situation. What you hear is likely the first warning you have of a possible threat. Spending time looking around to judge the situation may often get you killed, so your hearing at that point in time is critical.

- Direction of enemy threat
- Nature of threat: a single weapon being fired or a group of weapons
- Type of weapon being fired: Is it automatic weapon fire, or a high calibre rifle? The difference between these two tells you the potential accuracy and penetration of the incoming fire and may well determine where you should seek cover.
- Distance: After a while you learn that the quality of a sound can tell you how far away it is coming from
- Reflections: Echoes can provide even further information on where the threat is coming from, as a sound will echo more when it travels through a built-up area and reflects off building walls.

Even within military vehicle simulators the audio can indicate systems failure, potential enemy threats, types of threat, direction of threat, etc. Knowing that there is a potential imminent engine failure in your fighter plane because you hear a change in its sound a second before the warning indicator lights up might just save your life.

Vehicles

Engine performance, road surface, weather conditions and the status of your load (freight moving around, or loose ropes and straps) are all extremely important to simulate during training to ensure operators gain experience dealing with dangerous situations. All of these are also scenarios where audio can inform the user of exactly what the issue is and often how bad the situation is.

The main reason we train anyone in operating various vehicles is to ensure they can do so safely, for their own well-being and for the safety of others. Providing situations that test a user’s response to potential danger allows assessors to judge the operator’s suitability and, equally important, allows the users themselves to gain confidence in their ability to handle a range of scenarios and know that they can respond in the best way.

Summary

Creating training simulators for military and industrial uses can be very expensive, and it is important that these products are effective. Lives are quite literally dependent on people being trained properly. Good quality audio is important for the reasons stated in this article, but more importantly any amount spent on adding and improving audio feedback in a simulator will have a more significant impact on the effectiveness of the training than money spent on other aspects of the product.
You could simulate all aspects of training safety with audio alone and know that the users understood exactly what the issue was and would never miss the problem. This is not the case with information delivered to our other senses. As a developer you could add a huge range of situations to your training simulator using only audio functionality, which is often faster to implement than other aspects of development. Effective audio design for training is cost-effective, impactful and, most importantly, improves the effectiveness of safety training across all industries.

Introduction

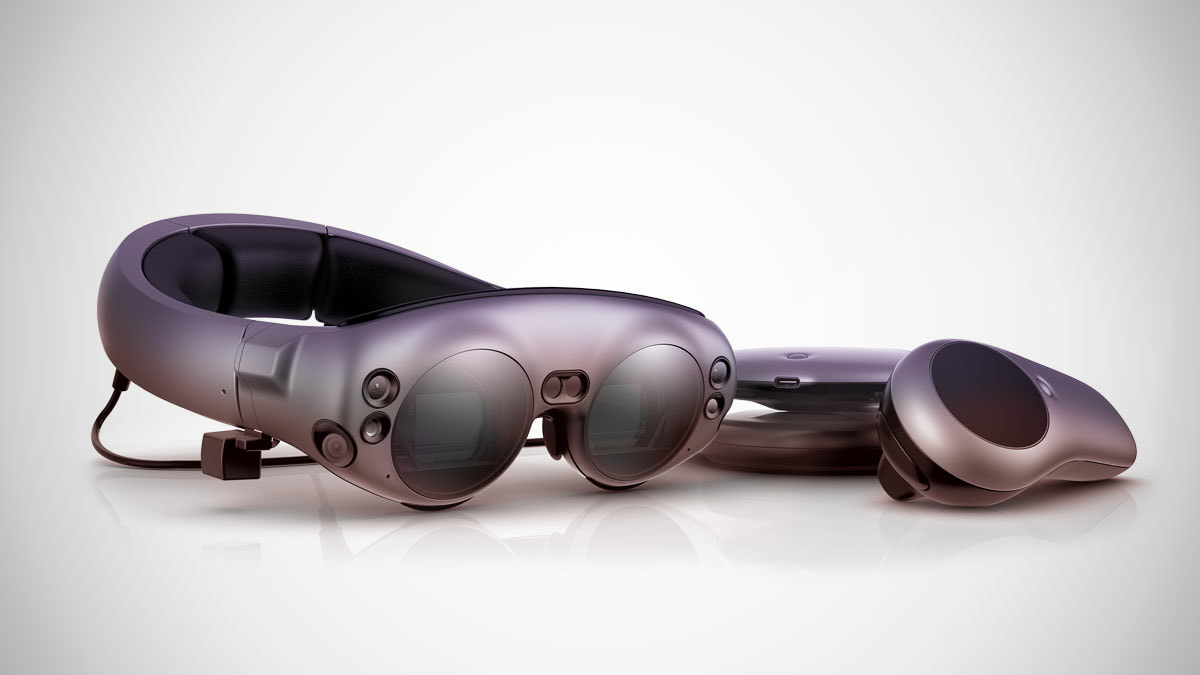

Any new technology platform needs a reason for people to adopt it. There is seldom a single incredible app or game that tips everyone over the edge, but more often a series of products that have mass market appeal and are noticeable enough that people decide to take the plunge into the new device. Last week Sony announced that the PlayStation VR had reached 3 million sales. Not bad, but still a small percentage of the overall 80 million PS4 owners. The growth of VR is strong, and AR is going to be hot on its tail. The question is, where are our killer apps for these platforms?

Two Important Factors

There are two factors in my mind that are affecting this situation. The first is Time. It takes time to create AAA games and applications, in some cases many years. This technology is still new enough that developers first need time to understand the format and then time to create high quality content. Maybe the next 12 months will see the release of a few of these longer development titles.

The second factor is the reason for this article, and that is Design. I have spent several years working and “playing” in the VR and AR spaces, and I have noticed a worrying trend, specifically among the larger developers. Too many of them are trying to shoehorn existing genres, and in some cases existing content, onto the VR platform, often in the hope of making a quick dollar. I understand that there is a risk with creating any new project and that porting existing content may be a way of testing the waters and learning some of the lessons, but this trend is a symptom of a wider problem and I think it is inhibiting these new formats rather than helping them.

VR is Very Different

VR is a very different platform and a different experience to traditional game formats, and we need to embrace that if we want to succeed in this area. Traditional “run and gun” style games simply do not work and neither do many other popular game genres.
Playing sport in VR, controlling a strategy game and even traditional role-playing formats all need not just a major overhaul in design, but an entirely new approach from the ground up. 360 VR cinema is the same. 100 years of cinematography and editing techniques are almost entirely redundant when we create content where the viewer can be looking anywhere at any time. Once we place our audience into the actual narrative environment, for both VR film and VR games, we have to approach the entire process differently.

There are some incredible examples of what we can achieve with these formats. Acclaimed director Alejandro González Iñárritu’s Carne y Arena 360 video experience continues to sell out tickets almost as fast as it puts them on sale. This is an experience that has been carefully crafted to utilise the potential of the technology. And there are various examples of games and applications that have taken clever approaches to these new formats.

Stop Thinking About Limitations

I think the issue is that we are still talking about what VR CANNOT do, or how we must adapt existing content to fit the LIMITATIONS of the format. It feels like we have been given the ability to fly and we are discussing the difficulties with swimming. One advantage of porting old formats to the new platforms is figuring out what works and what does not; in this way it is a legitimate exercise. But we have about 50 examples of FPS games not working in VR, so I think it might be time to move on.

It is obviously not easy to simply design a killer app, but anyone who experiences VR or 360 content will understand pretty quickly which aspects of the experience work well and which do not. I have at least a dozen designs for VR content floating around inside my head that simply came from analysing the experiences I enjoyed and comparing them with those I did not. Rapid movement and combat mechanics defined many of our traditional game styles on consoles and computers.
Mobile platforms introduced the concept of quickly swiping our fingers or guiding objects with multiple taps. We adapted our control methods to better suit the device capabilities. VR places us into an environment that completely surrounds us, and yet so many experiences only utilise a tiny portion of that space. If rapid movement causes nausea in VR then don’t use it. If controlling 100 units in a strategic battle is impossible in VR then perhaps the user should be placed onto the battlefield to command 5 characters instead of floating over it. God’s view games are obviously not the best use of VR…or are they?

Creating a killer app is not going to be easy, and nor should it be. It should be the culmination of an understanding of how the tech can be utilised effectively, with a design whose surface simplicity belies its underlying clever creation.

What We Can Do

If movement in VR is difficult, then maybe the audience should be static, OR the user could be carried or moved around. (Think wheelchair or sitting on the shoulder of a giant.) Movement is not the problem; the audience controlling that movement currently is. Putting the audience on “rails” allows the developer to tune the experience exactly to avoid issues.

We have a 360 sphere to inhabit in VR. Please use it, both for movement potential and for drawing the audience’s attention to anywhere around them. (Look up AND look down.)

Experiences in vehicles work well as the audience is seated and controls the movement of the vehicle. This can also reduce nausea, as the fixed points of a cockpit help to counter it.

We can use arm gestures and movement combined with button input, combined with upper body movement. This is a massive amount of input data to work with.

Spatial audio is incredibly powerful at both supporting narrative and providing data to the audience. Use it!

Scale is incredible in VR.
When I can stand right in front of a Death Stalker or look out the window and see the USS Enterprise at what I perceive as 1:1 scale, then every previous gaming experience I have ever had pales in comparison. VR is incredible in this manner. Use this to your advantage.

VR isolates us from the real world. A headset and headphones block out real world sensory input. We are placed into a virtual world like diving into water. Embrace that ability and surround your audiences with both visual and auditory information. Feed their senses and they will love being inside the world you created.

Conclusion

All of our previous formats of media have positioned the audience at a window where they could peer into another world and view the tiny portion of that world visible from the window. VR and 360 cinema allow all of us to step through the window and enter those worlds. By contrast, AR allows us to reach through the window and pull objects and characters through into our world. We cannot hope to achieve this level of immersion if we persist with our current designs. Pushing my face up against the window is not the same as letting me step through it.

During my time at Magic Leap my task was to design, create, think about and research how audio would function on an AR wearable device and what the audience would experience. From that list the most important item was “think about”. All of the new reality formats differ in many ways from traditional media, but AR wearable devices introduce a whole new series of challenges, and none is more difficult than deciding what our audiences will hear through an AR wearable device.

I want to start by offering my definition of AR vs VR. In VR we place our audience into a virtual world. We surround them with all the necessary aspects of that world to convince them that they have indeed been transported to a realistic and dynamic environment.
We override their senses with the content that we have created in an attempt to convince the audience that this virtual world actually exists. In some ways this is a reasonably simple task. A VR headset and headphones mostly block the audience’s sight and hearing, so we are depriving them of real world input, and the human brain will quite quickly latch on to the virtual information and process it as valid. (At least to some extent.)

In AR the audience’s world still exists. They still receive sensory information for what they see and hear, and instead we attempt to insert virtual objects into the real world for the audience to experience. This is a much tougher proposition for our brains to accept. At all times we have the comparison of the real world right there beside the created virtual objects. We must blend how the virtual objects look and sound into the space the audience is in. Visually this means an object needs to occlude and be occluded by objects in the real world, it should cast shadows, and it should both affect and be affected by the lighting of the world space.

From an audio point of view all the same factors apply. A virtual object should emit sound as though it were in our world, and this is incredibly hard. In fact, right now with the existing technology it is TOO hard. There are too many factors and too many calculations of how sound behaves for us to replicate it perfectly on any device. There is also the very real challenge that we still do not 100% understand exactly how humans hear and perceive sound under all circumstances. We can create the most realistic looking object and place it into a real-world space with perfect lighting and occlusion, but if the sound of that object is not convincing to the audience then the entire illusion will collapse.

So, the question becomes, how do we create convincing audio for AR? The first step is to understand the limitations of devices such as Magic Leap One, HoloLens and other wearable AR devices.
Remember, limitations are not always problems to solve; they can be opportunities to utilize.

Things that are difficult for AR audio.

I am not going to explain each of these aspects in this article; you will just need to trust me for now that these things are all pretty hard to achieve. (I may go through them in more detail in the future.)

The nature of AR means that the sound created by the device needs to blend with the real world, so the device does not obscure what we can hear in the real world. Our AR sound needs to blend with the sounds in the audience’s space. This alone creates a huge range of possibilities. Is the audience sitting quietly in their lounge room, or are they travelling on a noisy train at peak hour? Remember, because the device allows real world sound into the audience’s ears, it can also bleed sound out so that others around the user may hear it as well. So a virtual avatar speaking to the audience may be speaking too softly, or perhaps you could utilize the device’s inbuilt microphones to detect the ambient noise level of the audience’s space and adjust the level of the avatar’s speech. Here is where we can analyse the creative opportunities of that single function and see how we can create an engaging experience for the audience.

Let us imagine that you are enjoying a virtual storyteller app on your AR device. A virtual avatar stands in front of you (or perhaps sits next to you if the device detects an empty seat). The avatar describes a mythical tale to you as you journey home on the train. This app has been cleverly designed to account for a range of environmental situations. Initially the avatar speaks to you in a normal voice as it tells its tale. But as the journey continues, the clever design of the developers is demonstrated.

As more people get on board your train the ambient noise level increases and it becomes harder for you to hear the story. You ask the avatar to speak up. The application detects the ambient noise levels and increases the intensity of the avatar’s voice. I do not mean the volume, I mean the actual intensity. When this app was created, the developers recorded the vocals at various intensity levels. If you ask me to speak more loudly it is not just an increase in volume; the entire way I speak will change. This application reflects that change by dynamically switching the dialogue to a more forceful speaking voice, so the words carry to the listener over increased background noise.

As still more people get on the train it once again gets harder to hear the avatar’s story. If you ask the avatar to speak even louder you risk annoying the other passengers, as the volume will become loud enough for others to hear. This time you ask the avatar to come closer to make it easier to hear. Visually you see the avatar lean forward to speak in your ear. The sound changes to a stage whisper. The avatar is now speaking “closer” to your ear. It is a more direct sound and as such does not need the same level of intensity as the previous speech. Essentially the volume is slightly louder to the listener, but the frequency content has also shifted to represent a sound source closer to your ear.

Finally, as you near home, the train has emptied of most of its passengers and you ask the avatar to speak more softly. The avatar leans back (sits in the now empty chair next to you) and resumes the normal default speaking manner.

There are various reasons why this “works” as an AR application. The audio consists only of human speech. That means it occupies a nice safe frequency range that the device can produce easily. Also, human hearing’s primary function is to take in speech. It is familiar to us, and so our brains help us to understand what we are hearing.
There is only a single object producing sound, and we are so familiar with how humans work that we expect the sound to come from the avatar’s mouth, and we expect that sound to be clearer and “louder” when the avatar moves closer to our ears. So, our perceptions are doing a lot to support this virtual illusion.

When the technology cannot provide 100% of the functionality we need, we can help the situation by utilizing human perceptions, and this works best if we align with normal expectations. The more you break away from expectations, the more you need the technology to support the illusion. A mouse is small, quiet and has a high-pitched squeak; an elephant is large, loud and has a low-pitched roar. If we adhere to this, the human brain will do much of the work for us.

There really are limitless opportunities for amazing and engaging applications that can be created for AR. The critical point is that they may not be the sorts of experiences we are used to enjoying. The lack of low frequency support means we cannot easily create giant rumbly Hollywood explosions, giant robots, and earth-shaking events. So perhaps we shouldn’t try to. The various other limitations on vision, sound and user input mean we need to unlearn a few old habits and adopt a few new ones.

What is very apparent is that we can no longer have a single person in a bubble design a game or an app and then expect the various disciplines to just make it happen. We need experts across all aspects of production to collaborate carefully and consider what is possible and how to make the most of that, rather than waste time trying to achieve production elements that are currently very hard or impossible to create.

As a freelancer I have the advantage of working on a wide range of projects and design styles with many different teams. This provides me with an insight you often don’t get with teams that focus on the same type of content over and over.
I think AR is a time for many of us to embrace concepts and ideas that may be different and even scary to us as we dig into what really is possible with a new format that has so much potential to amaze.
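
For developers who want something concrete, the storyteller scenario described earlier can be sketched as a small decision function. This is only a rough illustration under my own assumptions, not how any shipping app works: the threshold values, the hysteresis margin, the `Delivery` names and the file naming scheme are all hypothetical, and the sketch steps between deliveries automatically from a measured ambient level, whereas in the scenario the listener asks the avatar to change. The point it shows is that each step selects a differently performed recording rather than simply turning up the gain.

```python
from enum import Enum


class Delivery(Enum):
    NORMAL = 0         # default speaking voice
    PROJECTED = 1      # more forceful recorded take, not just more gain
    CLOSE_WHISPER = 2  # avatar leans in and uses a stage whisper


# Ordered from quietest to noisiest environment.
LEVELS = [Delivery.NORMAL, Delivery.PROJECTED, Delivery.CLOSE_WHISPER]

# Hypothetical ambient levels (dB) at which the avatar steps up one delivery.
STEP_UP_DB = [65.0, 78.0]

# Hypothetical margin so small fluctuations do not flip the delivery back and forth.
HYSTERESIS_DB = 3.0

# Hypothetical suffixes for dialogue recorded at each intensity.
TAKE_SUFFIX = {
    Delivery.NORMAL: "normal",
    Delivery.PROJECTED: "projected",
    Delivery.CLOSE_WHISPER: "whisper",
}


def choose_delivery(ambient_db: float, current: Delivery) -> Delivery:
    """Pick the avatar's delivery from the ambient noise level."""
    idx = LEVELS.index(current)
    # Step up while the ambient level reaches the next threshold.
    while idx < len(STEP_UP_DB) and ambient_db >= STEP_UP_DB[idx]:
        idx += 1
    # Step down only once the level falls clearly below the threshold.
    while idx > 0 and ambient_db < STEP_UP_DB[idx - 1] - HYSTERESIS_DB:
        idx -= 1
    return LEVELS[idx]


def take_for(line_id: str, delivery: Delivery) -> str:
    """Return the (hypothetical) audio file recorded for this delivery."""
    return f"{line_id}_{TAKE_SUFFIX[delivery]}.wav"


if __name__ == "__main__":
    state = Delivery.NORMAL
    # Train fills up, then empties again.
    for ambient in (50.0, 70.0, 80.0, 76.0, 55.0):
        state = choose_delivery(ambient, state)
        print(ambient, state.name, take_for("story_line_01", state))
```

The hysteresis margin is a design choice: without it, a carriage hovering around one threshold would make the avatar switch voices every few seconds, which would break the illusion far more than a slightly late change.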

Author

Stephan Schütze has been recording sounds for over twenty years. This journal logs his thoughts and experiences.

Categories

All

Archives

April 2019
