
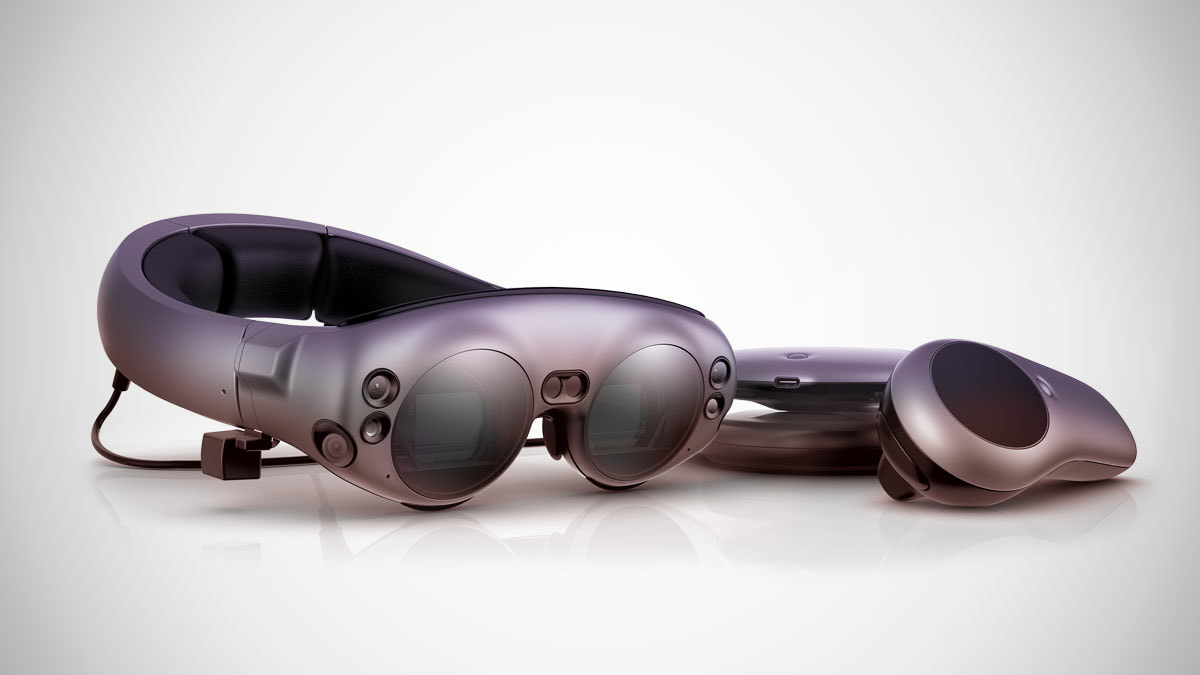

During my time at Magic Leap, my task was to design, create, think about and research how audio would function on an AR wearable device and what the audience would experience. Of those tasks, the most important was “think about”. All of the new reality formats differ in many ways from traditional media, but AR wearable devices introduce a whole new series of challenges, and none is more difficult than presenting our audiences with what they will hear through the device.

I want to start by offering my definition of AR vs VR. In VR we place our audience into a virtual world. We surround them with all the necessary aspects of that world to convince them that they have indeed been transported to a realistic and dynamic environment. We override their senses with the content we have created in an attempt to persuade the audience that this virtual world actually exists. In some ways this is a reasonably simple task. A VR headset and headphones largely block the audience’s sight and hearing, so we are depriving them of real-world input, and the human brain will quite quickly latch on to the virtual information and accept it as valid (at least to some extent).

In AR the audience’s world still exists. They still receive sensory information from what they see and hear, and instead we attempt to insert virtual objects into the real world for the audience to experience. This is a much tougher proposition for our brains to accept, because at all times the real world is right there for comparison beside the virtual objects we have created. We must blend how the virtual objects look and sound into the space the audience is in. Visually this means an object needs to occlude and be occluded by objects in the real world, it should cast shadows, and it should both affect and be affected by the lighting of the space. From an audio point of view all the same factors apply.
A virtual object should emit sound as though it were in our world, and this is incredibly hard. In fact, with the technology that exists right now, it is TOO hard. There are too many factors, and too many calculations describing how sound behaves, for us to replicate it perfectly on any device. There is also the very real challenge that we still do not fully understand how humans hear and perceive sound under all circumstances. We can create the most realistic-looking object and place it into a real-world space with perfect lighting and occlusion, but if the sound of that object is not convincing to the audience, the entire illusion will collapse.

So the question becomes: how do we create convincing audio for AR? The first step is to understand the limitations of devices such as the Magic Leap One, HoloLens and other wearable AR devices. Remember that limitations are not always problems to solve; they can be opportunities to utilize.

Things that are difficult for AR audio.

I am not going to explain each of these aspects in this article; for now you will just need to trust me that they are all pretty hard to achieve. (I may go through them in more detail in the future.)

The nature of AR means the device does not block out what we can hear in the real world, so the sound it creates needs to blend with the sounds in the audience’s space. This alone creates a huge range of possibilities. Is the audience sitting quietly in their lounge room, or travelling on a noisy train at peak hour? Remember that because the device lets real-world sound in to the audience’s ears, it can also let sound bleed out, so that others around the user may hear it as well. A virtual avatar speaking to the audience may therefore be too quiet to hear over the noise, yet simply turning it up risks disturbing the people nearby. Instead, you could use the device’s inbuilt microphones to detect the ambient noise level of the audience’s space and adjust the level of the avatar’s speech accordingly.

Here is where we can analyse the creative opportunities of that single function and see how it can help us create an engaging experience for the audience. Let us imagine that you are enjoying a virtual storyteller app on your AR device. A virtual avatar stands in front of you (or perhaps sits next to you if the device detects an empty seat). The avatar recounts a mythical tale as you journey home on the train. This app has been cleverly designed to account for a range of environmental situations. Initially the avatar speaks to you in a normal voice as it tells its tale, but as the journey continues, the clever design of the developers is demonstrated.
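Detecting the ambient noise level from the microphones can start with something as simple as an RMS measurement. The following is a minimal Python sketch, not any device’s actual API: it assumes the microphone delivers buffers of floating-point samples normalised to [-1.0, 1.0], and `ambient_level_dbfs` and `smooth_level` are hypothetical helper names. The result is relative to digital full scale, not a calibrated sound pressure level, so any thresholds built on it would need tuning per device.

```python
import math
from typing import Sequence


def ambient_level_dbfs(samples: Sequence[float], floor_db: float = -120.0) -> float:
    """Estimate the level of one microphone buffer in dB relative to full scale.

    Assumes samples are floats normalised to [-1.0, 1.0]. With this unweighted
    RMS convention a full-scale sine reads about -3 dB. This is not a
    calibrated SPL reading.
    """
    if not samples:
        return floor_db
    # Mean of the squared samples; 10 * log10 of this equals 20 * log10(RMS).
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return floor_db  # digital silence: clamp instead of taking log10(0)
    return max(floor_db, 10.0 * math.log10(mean_square))


def smooth_level(previous_db: float, current_db: float, alpha: float = 0.1) -> float:
    """Exponentially smooth successive readings so brief noises don't cause jumps."""
    return previous_db + alpha * (current_db - previous_db)
```

Smoothing matters here because a single slammed door or passing announcement should not make the avatar suddenly change the way it speaks; a small `alpha` reacts only to sustained changes in the carriage.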

As more people board the train, the ambient noise level increases and it becomes harder for you to hear the story. You ask the avatar to speak up. The application detects the ambient noise level and increases the intensity of the avatar’s voice. I do not mean the volume; I mean the actual intensity. When this app was created, the developers recorded the vocals at several intensity levels. If you ask me to speak more loudly, it is not just my volume that increases; the entire way I speak changes. The application reflects that change by dynamically switching the dialogue to a more forceful speaking voice, so the words carry to the listener over the increased background noise.

As still more people get on the train, it once again gets harder to hear the avatar’s story. If you ask the avatar to speak even louder, you risk annoying the other passengers, because the volume will become loud enough for them to hear. This time you ask the avatar to come closer. Visually, you see the avatar lean forward to speak in your ear, and the sound changes to a stage whisper. The avatar is now speaking “closer” to your ear; it is a more direct sound and so does not need the same intensity as the previous speech. The volume is slightly louder to the listener, but the frequency content has also shifted to represent a sound source closer to your ear.

Finally, as you near home, the train has emptied of most of its passengers and you ask the avatar to speak more softly. The avatar leans back (or sits in the now-empty seat next to you) and resumes its normal default speaking manner.

There are various reasons why this “works” as an AR application. The audio consists only of human speech, which occupies a nice safe frequency range that the device can reproduce easily. Also, human hearing is, above all, tuned for speech; it is familiar to us, so our brains help us to understand what we are hearing.
There is only a single object producing sound, and we are so familiar with how humans behave that we expect the sound to come from the avatar’s mouth, and we expect it to be clearer and “louder” when the avatar moves closer to our ears. So our perceptions are doing a lot to support this virtual illusion. When the technology cannot provide 100% of the functionality we need, we can help the situation by utilizing human perception, and this works best if we align with normal expectations. The more you break away from expectations, the more you need the technology to carry the load. A mouse is small, quiet and has a high-pitched squeak; an elephant is large, loud and has a low-pitched roar. If we adhere to these expectations, the human brain will do much of the work for us.

There really are limitless opportunities for amazing and engaging applications to be created for AR. The critical point is that they may not be the sorts of experiences we are used to enjoying. The lack of low-frequency support means we cannot easily create giant rumbly Hollywood explosions, giant robots and earth-shaking events. So perhaps we shouldn’t try to. The various other limitations on vision, sound and user input mean we need to unlearn a few old habits and adopt a few new ones.

What is very apparent is that we can no longer have a single person design a game or an app in a bubble and then expect the various disciplines to just make it happen. We need experts across all aspects of production to collaborate carefully, considering what is possible and how to make the most of it, rather than wasting time trying to achieve production elements that are currently very hard or impossible to create. As a freelancer I have the advantage of working on a wide range of projects and design styles with many different teams. This gives me an insight you often don’t get on teams that focus on the same type of content over and over.
I think AR is a time for many of us to embrace concepts and ideas that may be different and even scary to us as we dig into what really is possible with a new format that has so much potential to amaze.
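The storyteller’s behaviour on the train could be automated along these lines. This is a minimal Python sketch, not Magic Leap’s implementation: it assumes an ambient level in dB has already been measured from the microphones, and the three take names, the boundary levels and the 3 dB hysteresis margin are hypothetical values chosen for illustration. Rather than turning up the gain, it switches between pre-recorded deliveries of the same line, stepping up as the noise rises and stepping back down only once the level has dropped clearly below a boundary, so the voice does not flip back and forth.

```python
from enum import Enum


class Delivery(Enum):
    """Hypothetical pre-recorded takes of the same dialogue."""
    NORMAL = 0     # default storytelling voice
    PROJECTED = 1  # recorded with a raised, more forceful voice
    CLOSE = 2      # avatar leans in; stage-whisper take


# Hypothetical ambient-level boundaries in dB (relative to full scale).
# BOUNDARIES_DB[i] is the level at which delivery i steps up to delivery i + 1.
BOUNDARIES_DB = [-50.0, -35.0]
# How far the level must fall below a boundary before stepping back down.
HYSTERESIS_DB = 3.0


def choose_delivery(ambient_db: float, current: Delivery) -> Delivery:
    """Return the take to play for the measured ambient level."""
    level = current.value
    # Step up while the ambient level has reached the next boundary.
    while level < len(BOUNDARIES_DB) and ambient_db >= BOUNDARIES_DB[level]:
        level += 1
    # Step down only once the level is clearly below the boundary beneath us.
    while level > 0 and ambient_db < BOUNDARIES_DB[level - 1] - HYSTERESIS_DB:
        level -= 1
    return Delivery(level)


# A quiet carriage, a filling train, a packed train, then an emptying one.
current = Delivery.NORMAL
for ambient in [-60.0, -45.0, -30.0, -37.0, -55.0]:
    current = choose_delivery(ambient, current)
    print(f"{ambient:6.1f} dB -> {current.name}")
```

Run as written, the journey steps from NORMAL to PROJECTED to CLOSE, holds CLOSE at -37 dB because that reading sits inside the hysteresis margin, and returns to NORMAL once the carriage is quiet. In the scenario above the listener triggers each change by asking; the same table could equally be driven by requests, with the ambient measurement only confirming that a change is needed.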


Author: Stephan Schütze has been recording sounds for over twenty years. This journal logs his thoughts and experiences.

Archives: April 2019
